When Google announced that its Photos app could store an “unlimited” number of pictures, I knew there was a catch, but I’ve been waiting to learn exactly what that catch would look like. Now, with what Google researchers have just revealed, I believe we are seeing the beginnings of it.

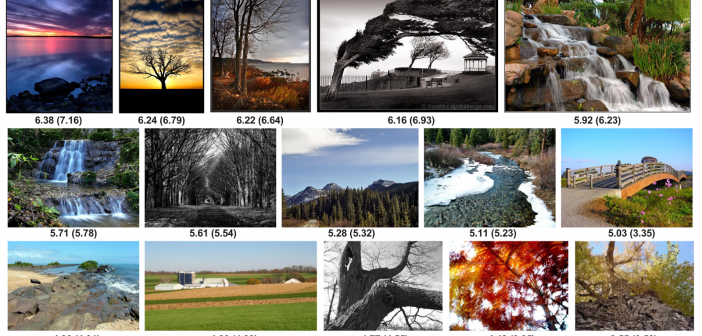

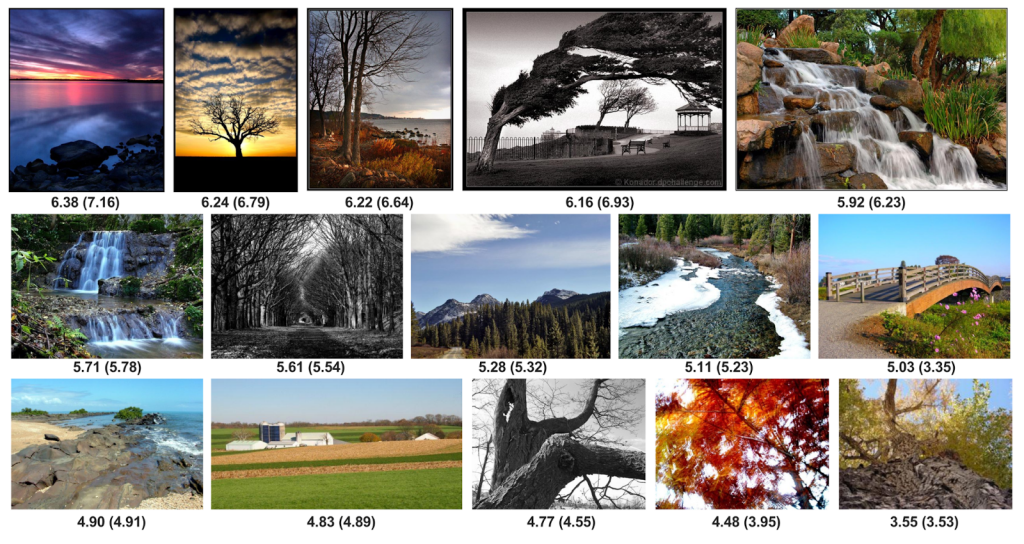

Ranking some examples labelled with the “landscape” tag from the AVA dataset using NIMA. Predicted NIMA (and ground truth) scores are shown below each image.

There are universal rules that decide what works in a photo and what doesn’t: most people can tell if an image is “off” even if they can’t articulate exactly why. And of course there are age-old guidelines such as the rule of thirds, along with other mathematical theories of composition. When it comes to human faces, symmetry typically yields the most positive responses in studies of attractiveness. Balance in a frame is another factor that a well-trained eye can spot instantly when deciding which images to publish and which to bin.

With millions of images being uploaded to its servers every day, Google is well placed to learn what works and what doesn’t. So much so that this particular group of researchers now claims it can use deep learning to teach AI to tell us when an image is aesthetically pleasing.

Publishing their findings on the Google Research Blog, the group said: “In ‘NIMA: Neural Image Assessment’ we introduce a deep CNN that is trained to predict which images a typical user would rate as looking good (technically) or attractive (aesthetically). NIMA relies on the success of state-of-the-art deep object recognition networks, building on their ability to understand general categories of objects despite many variations.

“Our proposed network can be used to not only score images reliably and with high correlation to human perception, but also it is useful for a variety of labor intensive and subjective tasks such as intelligent photo editing, optimizing visual quality for increased user engagement, or minimizing perceived visual errors in an imaging pipeline.”
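According to the NIMA paper, the network does not output a single number: it predicts a probability distribution over ratings from 1 to 10, and a single score like those in the caption above is the mean of that distribution. The minimal Python sketch below illustrates that idea under stated assumptions. The function names are hypothetical (this is not Google’s code), and `emd` implements the normalised earth mover’s distance with r = 2 that the paper describes using to compare a predicted distribution with the distribution of human ratings.

```python
import math

# Assumption for this sketch: ten rating buckets, 1..10, as described in the NIMA paper.
RATINGS = range(1, 11)


def mean_score(probs):
    """Hypothetical helper: collapse a distribution over ratings 1..10 into one mean score."""
    if len(probs) != 10:
        raise ValueError("expected 10 probabilities, one per rating 1..10")
    total = sum(probs)  # normalise in case the probabilities don't sum exactly to 1
    return sum(r * p for r, p in zip(RATINGS, probs)) / total


def std_score(probs):
    """Hypothetical helper: spread (standard deviation) of the predicted ratings."""
    mu = mean_score(probs)
    total = sum(probs)
    var = sum(p * (r - mu) ** 2 for r, p in zip(RATINGS, probs)) / total
    return math.sqrt(var)


def emd(p, q, r=2):
    """Normalised earth mover's distance between two distributions over the same ordered buckets.

    Compares the cumulative distributions bucket by bucket, averages |CDF_p - CDF_q|**r,
    and takes the r-th root.
    """
    if len(p) != len(q):
        raise ValueError("distributions must have the same number of buckets")
    cdf_p = cdf_q = 0.0
    acc = 0.0
    for a, b in zip(p, q):
        cdf_p += a
        cdf_q += b
        acc += abs(cdf_p - cdf_q) ** r
    return (acc / len(p)) ** (1.0 / r)


if __name__ == "__main__":
    uniform = [0.1] * 10  # a made-up "undecided" prediction, not real NIMA output
    print(round(mean_score(uniform), 2), round(std_score(uniform), 2))
```

Comparing cumulative distributions rather than individual buckets is what makes the distance respect the ordering of ratings: predicting 9 when humans said 10 costs less than predicting 1.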

Not convinced? Well, it seems Google may be on to something. On images that had each been rated by around 200 people, Neural Image Assessment produced ratings that closely matched the average human scores.

There are already apps like EyeEm Selects that can help users decide which images to post to Instagram for maximum impact, so it seems the beginning of the end is well on its way, people. Soon computers, phones and street cameras will be able to tell if we’re hot or not. Who knows what sinister repercussions could be on the cards, but it all sounds very Black Mirror to us.